This is Part 2 of a two-part series evaluating a recent scientific study on medication for low back pain and dementia. In Part 1, we talked about why this study asks the wrong question. Today, we’ll talk about why it (probably) gets the wrong answer even for the question it asks.

On Monday, I wrote about why a recent paper claiming that gabapentin use for low-back pain might be causing dementia wasn’t asking the right question. Or, at least, that it didn’t give us enough information to understand what question exactly it was asking.

Today, I’m going to explain why the statistical analysis they use is fundamentally flawed and almost certainly gives the wrong answer even if their question had been good. This is going to be a bit more technical than some of my posts, but it’s important so I’ll do my best to make it understandable.

What you need to know about data analysis in general

You don’t need to know math to understand the problem here. What you do need to know is that there are two types of data analysis that we can do.

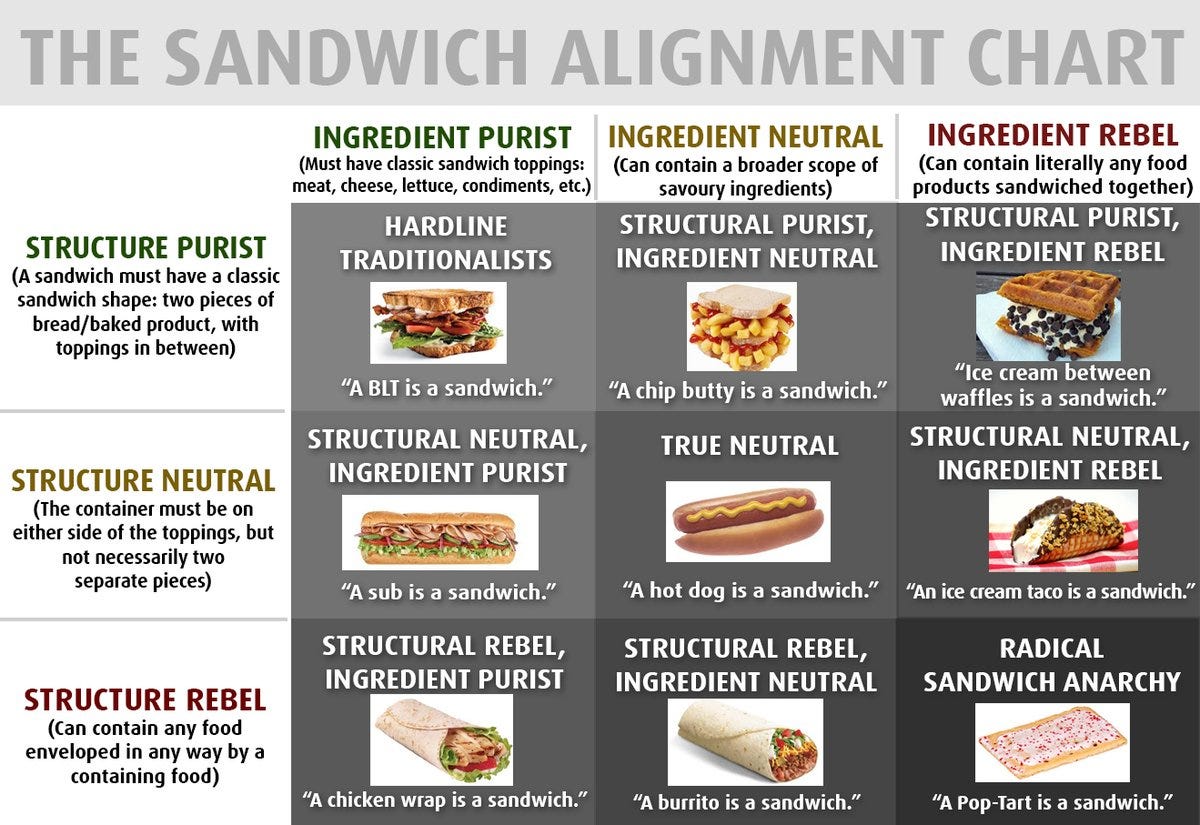

The first option is to assess a statistical correlation. This is easy. You can do it in a million different ways. Some are better and some are worse. In general, if the analysis gives you a result, that result has a statistical meaning. But statistical meaning is not always useful in the real world. Saying “two things are correlated” is like saying “I ate a sandwich”. You can still claim that even if you ate a pop-tart, but most people are probably thinking of something closer to a BLT. There are lots of different ways something can be a sandwich; some are better and some are worse.

The second option is to estimate a cause and effect relationship. This is hard. There are many fewer ways to do it. And each approach requires certain specific criteria (we call these assumptions) to be met. If the criteria are not met, then even if the analysis gives you a result, that result has no causal meaning. In terms of Sandwich Alignment, this means agreeing first on a set of ingredient and structure criteria, and then only calling our food a sandwich if it meets those criteria. The stricter the criteria, the fewer options there are for what counts as a sandwich.
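If you like to see things in code, here is a minimal sketch of the difference between the two options. It is a toy simulation, not anything from the study: the function names, the hidden common cause, and every number in it are assumptions made up for illustration. The exposure never affects the outcome, yet the two are clearly correlated unless the exposure is assigned at random.

```python
import random


def pearson(xs, ys):
    """Plain Pearson correlation, written out to avoid extra dependencies."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate_correlation(n, randomized, seed=0):
    """Correlation between exposure and outcome when the true effect is zero."""
    rng = random.Random(seed)
    exposure, outcome = [], []
    for _ in range(n):
        hidden = rng.gauss(0, 1)  # hypothetical common cause we never measure
        if randomized:
            x = rng.gauss(0, 1)           # exposure assigned by coin flip
        else:
            x = hidden + rng.gauss(0, 1)  # exposure follows the hidden cause
        y = hidden + rng.gauss(0, 1)      # outcome depends on hidden only, never on x
        exposure.append(x)
        outcome.append(y)
    return pearson(exposure, outcome)


observational_r = simulate_correlation(20_000, randomized=False)
randomized_r = simulate_correlation(20_000, randomized=True)
print(f"observational correlation: {observational_r:.2f}")
print(f"randomized correlation:    {randomized_r:.2f}")
```

Both numbers are valid correlations. Only the second one lines up with the true (zero) cause and effect relationship, and it does so because the way the data were generated meets a causal criterion, not because the arithmetic is any different.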

What you need to know about the data analysis in this study

Okay, so how does this sandwich alignment analogy help us understand a study of gabapentin and dementia in back pain patients? Well, the bottom line is this: the authors use a method that requires a specific set of causal criteria, but the data do not match those criteria. So the results of the analysis aren’t causal.

In sandwich terms, if we all agreed that sandwiches must meet either the structure purist or the ingredients purist criteria, then pop-tarts aren’t sandwiches because they don’t match those criteria.

In the case of this study, the method the authors use is called propensity score matching. If you’re not an epidemiologist or statistician, you’ve probably never heard of this method. That’s okay because you don’t need to know the details to know why it’s the wrong choice here.

What you do need to know is that, like almost every other data analysis method, propensity score matching requires that the treatment or exposure you are studying does not change over time. This is an absolutely required criterion.

But the study authors look at gabapentin prescriptions over time. Some people get only a few prescriptions. Other people get lots. Gabapentin prescription changes over time. This doesn’t match our required criterion. So the results of the analysis aren’t causal.
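For readers who want to see one way this can go wrong, here is a hedged toy simulation. It is not the study's data or analysis. The variable names (`frail`, `rx_first`, `pain_later`, `rx_second`) and all probabilities are invented, and it assumes one particular mechanism: later prescriptions depend on how pain evolves, and pain is tied to an unmeasured frailty that also drives dementia. In this made-up world the drug has exactly zero effect on dementia.

```python
import random


def simulate_patients(n=200_000, seed=1):
    """Toy cohort in which prescriptions never affect dementia."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        frail = rng.random() < 0.5        # unmeasured, raises dementia risk
        rx_first = rng.random() < 0.5     # first prescription, as good as random
        # Ongoing pain is more likely if frail, less likely if treated.
        pain_later = rng.random() < 0.3 + 0.4 * frail - 0.2 * rx_first
        # The second prescription is driven by ongoing pain.
        rx_second = rng.random() < 0.2 + 0.6 * pain_later
        # Dementia depends on frailty only: no drug effect at all.
        dementia = rng.random() < 0.1 + 0.3 * frail
        rows.append((rx_first, pain_later, rx_second, dementia))
    return rows


def dementia_risk(rows, keep):
    """Share of selected patients who develop dementia."""
    selected = [r for r in rows if keep(r)]
    return sum(r[3] for r in selected) / len(selected)


rows = simulate_patients()

# Comparison 1: second prescription vs. none, ignoring later pain.
crude_gap = (dementia_risk(rows, lambda r: r[2])
             - dementia_risk(rows, lambda r: not r[2]))

# Comparison 2: among people with ongoing pain, first prescription vs. none.
adjusted_gap = (dementia_risk(rows, lambda r: r[1] and r[0])
                - dementia_risk(rows, lambda r: r[1] and not r[0]))

print(f"risk difference ignoring later pain:    {crude_gap:.3f}")
print(f"risk difference within ongoing pain:    {adjusted_gap:.3f}")
```

Under these assumptions, both comparisons make the drug look harmful even though it does nothing. Ignoring later pain leaves the second prescription tangled up with frailty. Restricting to people with ongoing pain fixes that but distorts the comparison for the first prescription, because treated people who still have pain are more likely to be frail. A method built for a treatment that happens once has no good way out of this bind.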

The bottom line

The authors used the wrong analysis method for the data they had. As a result:

If there is no true effect of gabapentin on dementia, this study might still (incorrectly) conclude there is an effect.

If there is a true effect of gabapentin on dementia, this study might (incorrectly) conclude that there is no effect, or it might (incorrectly) estimate a bigger effect than the true effect, or might even (incorrectly) estimate a smaller effect than the true effect.

The study claims to have found an effect, but we cannot be at all confident that an effect truly exists.

If the study had not found an effect, we would also not have been able to be confident that an effect truly did not exist.

If you’re only interested in making sense of health news headlines, and occasionally reading other people’s research, you can stop reading here.

If you want to understand how research can be done better, either because you read a lot of other people’s research or because you do your own, then keep reading. Let’s talk about what the authors could have done instead.
